Difficult Decisions

The people of the UK will soon need to decide whether they want to leave the EU. To make this decision, each person needs to weigh up a jumble of possible consequences of staying or leaving: will they be better off, what will happen to immigration, what about security? This is the kind of judgment call, having to predict what might happen in an uncertain future, that characterises all kinds of personal and business decisions.

People’s natural inclination in these circumstances is to consult an expert, either in person or in an editorial column, or to fall back on some firmly held belief about how the world works. One of these approaches has a track record of predictive success roughly equal to that of a chimp throwing darts at a board containing the different scenarios; the other is a bit worse. But there is another way, which has a much better track record than either of these two common approaches.

Who Are the Best Forecasters?

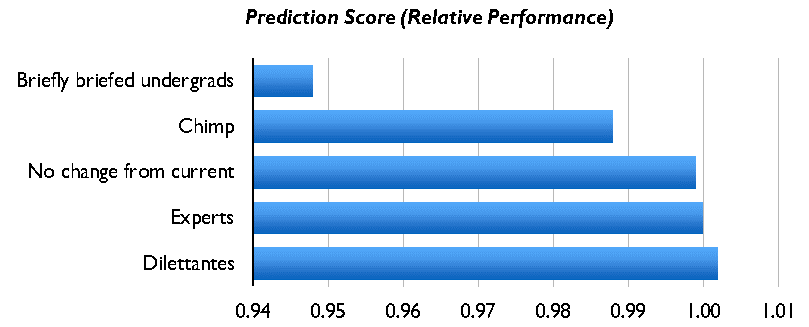

Here is an analysis[1] that tested how accurately different groups predicted a series of important political events, such as the break-up of the USSR and leadership changes in post-apartheid South Africa.

The first thing to notice is that the average well-informed human’s predictions are barely better than assuming nothing changes. The second thing is that intelligent people who take time to inform themselves, or “dilettantes”, predict a bit better than experts do. Experts are typically surer of themselves and tend to be overconfident on the extremes, being more sure that something will happen when it doesn’t, and being more sure something won’t happen when it does.

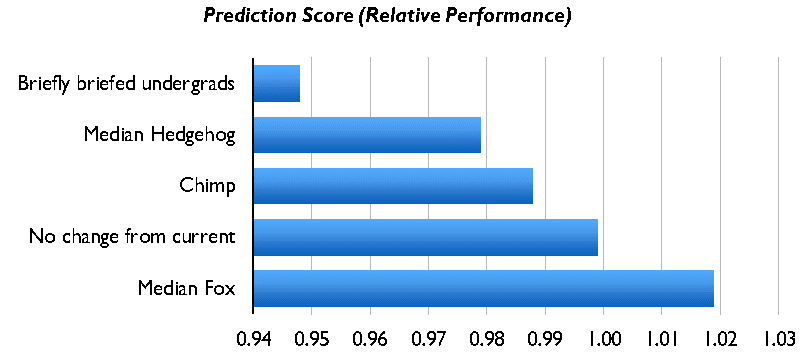

Looking deeper into the same study, at the characteristics of the people who make the best and worst forecasters, reveals a very useful insight. Lots of factors make no difference: age, education, technical expertise beyond a fairly basic level, political leaning, optimism versus pessimism, and an idealist versus realist worldview. But one trait does distinguish good forecasters from bad ones: the willingness to consider a range of viewpoints and facts, and to form views based on that perspective and evidence rather than on any preconceived beliefs. People with this openness are termed foxes in the study; people with more determination to stick to their worldview are termed hedgehogs. The foxes were much better forecasters than the hedgehogs.

Worryingly, those hedgehogs look like the politicians and expert ideologues filling the airwaves with elegant and consistent theories; the ones many people rely on when forming their own judgments to make important decisions. They also look like visionaries and business gurus with timeless, universal success principles. The median hedgehog is worse at judging outcomes of important events than a dart-throwing chimp.

So How Do Good Forecasters Do It?

In an ongoing real-life study[2], volunteer dilettantes, who work just like the foxes in the previous study, have outperformed every other competitor group in formal forecasting competitions, including university departments and government intelligence analysts with access to privileged information. They don’t just beat every competitor; they beat them easily, every year. These “superforecasters” have no specialist expertise; what they share is a mindset and a common approach:

- Clearly define the problem, being careful not to substitute an easier one for it

- Break this bigger problem down into components small enough to analyse clearly

- Use the best, most relevant external data, and internal reasoning, to test each component

- Share that reasoning and evidence with other people to test whether it is robust, whether you’ve missed or misunderstood anything, inviting challenges and improvements

- Adjust the answer as relevant new evidence emerges

- Once the event has come to pass, measure and get feedback about how accurate you have been, learn, and adjust for next time

If this looks to you like good critical thinking that anyone can learn, that’s because it is. It doesn’t require genius, years of specialist subject matter expertise, privileged inside information, or any secret formulas. Good, robust critical thinking just needs a disposition to be open and to challenge, some simple training, and a bit of regular practice.
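The last step in the list above, measuring how accurate you have been, can be made concrete with a scoring rule. One common choice is the Brier score: the average squared gap between the probability you gave and what actually happened (1 if the event occurred, 0 if it did not). Lower is better, and always answering 50% scores 0.25. The sketch below uses the simple yes/no form of the score, which runs from 0 to 1; the function name and the two sets of forecasts are made up for illustration and are not data from either study.

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes.

    Simple binary form: 0.0 is a perfect record, 1.0 is the worst possible.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need one outcome for every forecast, and at least one")
    squared_errors = [(p - o) ** 2 for p, o in zip(forecasts, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical example: four yes/no questions, only the first event happened.
outcomes = [1, 0, 0, 0]

# An overconfident, hedgehog-style forecaster (invented numbers).
confident = [0.95, 0.90, 0.05, 0.90]

# A more cautious, fox-style forecaster (invented numbers).
cautious = [0.70, 0.40, 0.20, 0.30]

# Someone who answers 50% to everything.
coin_flip = [0.5, 0.5, 0.5, 0.5]

for name, forecasts in [("confident", confident),
                        ("cautious", cautious),
                        ("coin flip", coin_flip)]:
    print(f"{name}: {brier_score(forecasts, outcomes):.3f}")
```

In this invented example the confident forecaster is badly wrong on two questions and ends up with a worse score than the coin flip, while the cautious forecaster beats both. Keeping a running score like this is one way to get the feedback the last step asks for.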

For a free introduction to critical thinking, sign up at www.kardelen.training/logic-tree/

[1] Expert Political Judgment, Philip Tetlock, 2005. I have changed the term “Contemporary base rate” to “No change from current” to ease understanding. I also excluded the tested models (cautious case-specific extrapolations, aggressive case-specific extrapolations, and autoregressive distributed lag models), which perform better than humans where they are available.

[2] Superforecasting: The Art & Science of Prediction, Philip Tetlock & Dan Gardner, 2015